Tuesday Poster Session

Category: Practice Management

P4053 - Using AI-Generated Patient Information Sheets on Colonoscopies to Improve Doctor-Patient Communication

Tuesday, October 24, 2023

10:30 AM - 4:00 PM PT

Location: Exhibit Hall



Louis Collins, BS, BA

Rutgers New Jersey Medical School

Newark, New Jersey

Presenting Author(s)

Louis Collins, BS, BA¹, Lisa M. Pinero, BS², Emily Keenan, BA³, Diana Finkel, DO⁴

¹Rutgers New Jersey Medical School, Newark, NJ; ²New Jersey Medical School, Miami, FL; ³Rutgers New Jersey Medical School, Morristown, NJ; ⁴New Jersey Medical School, Newark, NJ

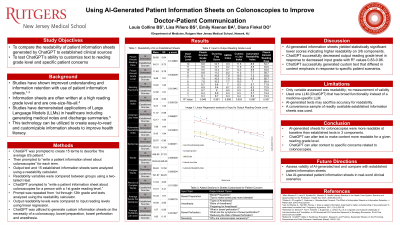

Introduction: Large language models (LLMs) such as ChatGPT have the potential to revolutionize hospital workflow and improve patient-provider communication because they can produce customizable, accessible text. The objective of this study was to compare the readability of patient information sheets generated by ChatGPT with those from established clinical sources such as UpToDate, and to test ChatGPT’s ability to tailor text to different reading comprehension levels and to specific patient concerns.

Methods: Patient information sheets were generated using OpenAI’s ChatGPT. ChatGPT was first prompted to create multiple terms describing “the average US patient.” Each term was then inserted into the prompt “Write a patient information sheet about colonoscopies for the (generated term).” The AI-generated texts were analyzed using a web-based readability calculator. Established patient information sheets were obtained from commonly used clinical databases and internet sources and analyzed with the same calculator. Readability outputs were compared between the two groups using a two-tailed t-test. Second, ChatGPT was prompted to “Write a patient information sheet about colonoscopies for a person with a 1st grade reading level.” This prompt was repeated for each grade from 1st to 12th, and the generated texts were analyzed with the readability calculator. Measured readability levels were compared with the requested reading levels using linear regression. Finally, ChatGPT was used to generate custom patient information sheets addressing specific patient concerns: the necessity of a colonoscopy, anxiety about bowel preparation, bowel perforation, and anesthesia.
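The abstract does not name the readability calculator or its six components. As a minimal sketch, assuming the Flesch-Kincaid Grade Level (FKGL) is one of those components, the score can be computed from word, sentence, and syllable counts, and the two groups can then be compared with a two-sample t statistic. The function names are hypothetical, the syllable counter is a crude vowel-group heuristic (so scores will differ somewhat from a web calculator), and the equal-variance (Student) form of the t-test is an assumption, since the abstract does not say which variant was used.

```python
import math
import re
from statistics import mean, variance


def count_syllables(word: str) -> int:
    """Approximate syllables as runs of vowels (heuristic, not a dictionary lookup)."""
    count = len(re.findall(r"[aeiouy]+", word.lower()))
    # A trailing silent 'e' usually does not add a syllable ("plate").
    if word.lower().endswith("e") and count > 1:
        count -= 1
    return max(count, 1)


def fk_grade_level(text: str) -> float:
    """Flesch-Kincaid Grade Level: 0.39*(words/sentences) + 11.8*(syllables/words) - 15.59."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


def pooled_t_statistic(a: list[float], b: list[float]) -> tuple[float, int]:
    """Student's two-sample t statistic (equal variances assumed) and its degrees of freedom."""
    n1, n2 = len(a), len(b)
    # statistics.variance is the sample variance (n - 1 denominator).
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / (n1 + n2 - 2)
    t = (mean(a) - mean(b)) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, n1 + n2 - 2
```

A lower FKGL means easier text, so "lower scores" in the Results corresponds to more readable sheets. For the two-tailed p-value, |t| is compared against the t distribution with the returned degrees of freedom; a library routine such as SciPy's `scipy.stats.ttest_ind` performs that two-sided test directly.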

Results: AI-generated patient information sheets had statistically significantly lower scores on 3 of 6 readability components, indicating that in these areas AI-generated texts were more readable than established texts. ChatGPT successfully adjusted the measured reading grade level to the requested input grade in all six components, with R² values ranging from 0.83 to 0.95. Finally, ChatGPT generated custom information sheets in response to specific patient scenario inputs, and these sheets differed in content emphasis.
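The R² values come from regressing measured grade level on requested grade (1 through 12) for each readability component. A minimal sketch of ordinary least squares and R² for one component follows; the function name `fit_line` is hypothetical, and no study data are reproduced here.

```python
def fit_line(x: list[float], y: list[float]) -> tuple[float, float, float]:
    """Ordinary least-squares fit y = slope*x + intercept; returns (slope, intercept, r_squared)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # R^2 = 1 - (residual sum of squares / total sum of squares).
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1 - ss_res / ss_tot
```

An R² near 1 means the measured grade tracks the requested grade almost linearly. It does not by itself show that the output hit the requested grade exactly; a slope near 1 and an intercept near 0 would be needed for that.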

Discussion: This study, comparing AI-generated patient information sheets with established online patient information sheets, highlights the capability of LLMs such as ChatGPT to create easy-to-read, customizable patient information that can improve patient-physician communication and enhance personal health literacy.

Louis Collins, BS, BA¹, Lisa M. Pinero, BS², Emily Keenan, BA³, Diana Finkel, DO⁴. P4053 - Using AI-Generated Patient Information Sheets on Colonoscopies to Improve Doctor-Patient Communication. ACG 2023 Annual Scientific Meeting Abstracts. Vancouver, BC, Canada: American College of Gastroenterology.

Disclosures:

Louis Collins indicated no relevant financial relationships.

Lisa Pinero indicated no relevant financial relationships.

Emily Keenan indicated no relevant financial relationships.

Diana Finkel indicated no relevant financial relationships.