Tuesday Poster Session

Category: Small Intestine

P4102 - Investigating Accuracy and Performance of Artificial Intelligence for Celiac Disease Information: A Comparative Study

Tuesday, October 24, 2023

10:30 AM - 4:00 PM PT

Location: Exhibit Hall


Claire Jansson-Knodell, MD

Cleveland Clinic

Cleveland, OH

Presenting Author(s)

Claire Jansson-Knodell, MD¹, Alberto Rubio-Tapia, MD²

¹Cleveland Clinic, Cleveland, OH; ²Cleveland Clinic Foundation, Cleveland, OH

Introduction: In a time of tremendous growth in artificial intelligence (AI), it is unclear if accuracy is prioritized as much as speed. We aimed to investigate the accuracy and performance of information on celiac disease (CD) provided by chatbots.

Methods: We conducted a cross-sectional comparative study of Google’s Bard, OpenAI’s ChatGPT (Research Preview May 24 Version), and Microsoft’s Bing Chat (using the precise and creative conversation styles). Ten questions about celiac disease were asked [Table]. Each response was evaluated by 2 content experts. A broad rating (correctness) was assigned to each response to the question posed. On a granular level, responses were assessed for key missing information, any factual inaccuracies, and clarity. Sources were checked for existence and reliability. A Cohen’s kappa coefficient was calculated to examine inter-rater agreement on the chatbot responses.
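For readers unfamiliar with the agreement statistic named above, the following is a minimal sketch of how Cohen’s kappa can be computed for two raters. It is not the authors’ analysis code: the function name `cohens_kappa` and the example ratings are hypothetical and chosen only for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: from each rater's marginal label frequencies.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[label] * counts_b[label] for label in counts_a) / (n * n)
    if expected == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


# Hypothetical ratings for six responses (NOT data from this study).
rater_1 = ["accurate", "accurate", "inaccurate", "accurate", "inaccurate", "accurate"]
rater_2 = ["accurate", "inaccurate", "inaccurate", "accurate", "accurate", "accurate"]
kappa = cohens_kappa(rater_1, rater_2)
print(round(kappa, 3))  # prints 0.25
```

In this made-up example the raters agree on 4 of 6 items (0.667), chance agreement is 0.556, and kappa is therefore 0.25. Kappa corrects raw agreement for the agreement expected by chance, which is why it can be modest even when both raters mark most responses as accurate.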

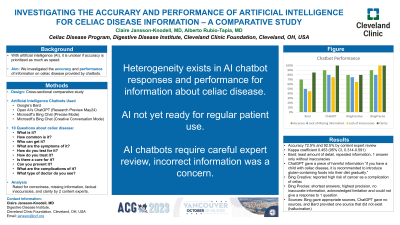

Results: Basic inquiries were made on celiac disease. Experts rated global responses as accurate 72.5% and 92.5% of the time, respectively. Clarity, or the reader’s ability to understand the information, was found 92.5% and 85% of the time by the raters. Performance metrics were variable [Figure]. The overall kappa coefficient was 0.453 (95% CI, 0.314-0.591). No issues were noted with Bing sources, ChatGPT provided no sources, and the only source provided by Bard did not exist (hallucination). The most egregiously inaccurate and potentially harmful answer was provided by ChatGPT, which stated “if you have a child with celiac disease, it is recommended to introduce gluten-containing foods into their diet gradually.” Bard provided the least detail, repeated information across questions, and answered only 1 question without any inaccuracies. Bing creative mode reported a high risk of some cancers as a complication of celiac disease and listed vitamin supplements as part of celiac treatment. Bing precise mode gave the shortest and most precise answers, with no inaccurate information provided. It was the only chatbot to decline to answer a question and acknowledge its limitations, stating “I can’t give a response to that.”

Discussion: This cross-sectional study of artificial intelligence in celiac disease revealed clear and mostly accurate responses, but wide variability. Incorrect information was a concern. These data suggest significant heterogeneity in AI responses and performance. AI may not yet be ready for regular patient use and requires careful expert review.

Claire Jansson-Knodell, MD1, Alberto Rubio-Tapia, MD2. P4102 - Investigating Accuracy and Performance of Artificial Intelligence for Celiac Disease Information: A Comparative Study, ACG 2023 Annual Scientific Meeting Abstracts. Vancouver, BC, Canada: American College of Gastroenterology.

Figure: Summary AI Performance Data

Disclosures:

Claire Jansson-Knodell: ClearPoint Neuro – Stock-publicly held company(excluding mutual/index funds). DarioHealth – Stock-publicly held company(excluding mutual/index funds). DermTech – Stock-publicly held company(excluding mutual/index funds). DiaMedica Therapeutics – Stock-publicly held company(excluding mutual/index funds). Exact Sciences – Stock-publicly held company(excluding mutual/index funds). Inari Medical – Stock-publicly held company(excluding mutual/index funds). Nano-X Imaging – Stock-publicly held company(excluding mutual/index funds). Outset Medical – Stock-publicly held company(excluding mutual/index funds). PavMed – Stock-publicly held company(excluding mutual/index funds). Takeda - AGA Research Scholars Award – Grant/Research Support. Transmedics – Stock-publicly held company(excluding mutual/index funds).

Alberto Rubio-Tapia indicated no relevant financial relationships.
