Monday Poster Session

Category: General Endoscopy

P1985 - Impact of Reviewers’ Characteristics and Training Programs on Inter-Observer Variability of Endoscopic Scoring Systems: A Systematic Review and Meta-Analysis

Monday, October 23, 2023

10:30 AM - 4:15 PM PT

Location: Exhibit Hall

Jana G. Al Hashash, MD, MSc, FACG

Associate Professor

Mayo Clinic

Jacksonville, FL

Presenting Author(s)

Jana G. Hashash, MD, MSc, FACG1, Francis A. Farraye, MD, MSc, MACG1, Yeli Wang, PhD2, Daniel Colucci, BS2, Shrujal Baxi, MD2, Sadaf Muneer, MS2, Faye Yu Ci Ng, BS2, Pratik Shingru, MD2, Gil Melmed, MD, MS3

1Mayo Clinic, Jacksonville, FL; 2Iterative Health Inc., Cambridge, MA; 3Center for Inflammatory Bowel Diseases, Cedars-Sinai Medical Center, Los Angeles, CA

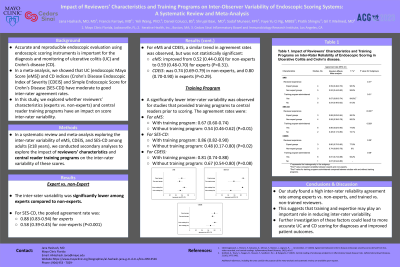

Introduction: Accurate and reproducible endoscopic evaluation using endoscopic scoring instruments is important for the diagnosis and monitoring of ulcerative colitis (UC) and Crohn’s disease (CD). In a meta-analysis, we showed that UC (endoscopic Mayo Score [eMS]) and CD indices (Crohn's Disease Endoscopic Index of Severity [CDEIS] and Simple Endoscopic Score for Crohn's Disease [SES-CD]) have moderate to good inter-rater agreement rates. In this study, we explored whether reviewers’ characteristics (experts vs. non-experts) and central reader training programs have an impact on score inter-rater variability.

Methods: In a systematic review and meta-analysis exploring the inter-rater variability of eMS, CDEIS, and SES-CD among adults (≥18 years), we conducted secondary analyses to explore the impact of reviewers’ characteristics and central reader training programs on the inter-rater variability of these scores.

Results: Inter-rater variability was significantly lower among experts than among non-experts. For SES-CD, the pooled agreement rate was 0.88 (0.83-0.94) for experts and 0.58 (0.39-0.45) for non-experts (P<0.001). For eMS and CDEIS, a similar trend was observed but was not statistically significant. For eMS, the agreement rate was 0.52 (0.44-0.60) for non-experts and 0.59 (0.48-0.70) for experts (P=0.31); for CDEIS, it was 0.74 (0.69-0.79) for non-experts and 0.80 (0.70-0.90) for experts (P=0.29).

Significantly lower inter-rater variability was observed in studies that provided training programs to central readers before they scored disease activity than in studies without such training. For eMS, the agreement rate was 0.67 (0.60-0.74) for studies with reader training compared to 0.54 (0.46-0.62) for studies without a training program (P=0.01). For SES-CD, the agreement rate was 0.86 (0.82-0.90) for studies with a training program and 0.48 (0.17-0.80) for studies without one (P=0.02). For CDEIS, the agreement rate was 0.81 (0.74-0.88) with training and 0.67 (0.54-0.80) without training (P=0.08).
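As a rough illustration of how a subgroup comparison like those above can be read, the sketch below recovers a standard error from each reported 95% confidence interval and applies a two-sided z-test to the difference between two pooled agreement rates. It assumes symmetric, normal-based intervals and independent subgroups. It is not the authors' analysis, which is not described in this abstract, and the function names are hypothetical.

```python
import math

def se_from_ci(lower, upper, z_crit=1.96):
    """Approximate standard error from a symmetric 95% CI (assumption)."""
    return (upper - lower) / (2 * z_crit)

def subgroup_z_test(est_a, ci_a, est_b, ci_b):
    """Two-sided z-test for the difference between two independent estimates."""
    se_a = se_from_ci(*ci_a)
    se_b = se_from_ci(*ci_b)
    diff = est_a - est_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    z = diff / se_diff
    # Two-sided p-value under the standard normal: 2 * (1 - Phi(|z|))
    p = math.erfc(abs(z) / math.sqrt(2))
    return diff, z, p

# eMS agreement rates reported above: with vs. without reader training
diff, z, p = subgroup_z_test(0.67, (0.60, 0.74), 0.54, (0.46, 0.62))
print(f"difference={diff:.2f}, z={z:.2f}, p={p:.3f}")
```

Because it works only from rounded summary figures, this approximation will not necessarily match the reported P-values exactly.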

Discussion: Our study found higher inter-rater agreement rates among experts than among non-experts, and among trained than among non-trained reviewers. This suggests that reader training and expertise may play an important role in reducing inter-rater variability. Further investigation of these factors could lead to more accurate UC and CD scoring for diagnosis and to improved patient outcomes.

Jana G. Hashash, MD, MSc, FACG1, Francis A. Farraye, MD, MSc, MACG1, Yeli Wang, PhD2, Daniel Colucci, BS2, Shrujal Baxi, MD2, Sadaf Muneer, MS2, Faye Yu Ci Ng, BS2, Pratik Shingru, MD2, Gil Melmed, MD, MS3. P1985 - Impact of Reviewers’ Characteristics and Training Programs on Inter-Observer Variability of Endoscopic Scoring Systems: A Systematic Review and Meta-Analysis, ACG 2023 Annual Scientific Meeting Abstracts. Vancouver, BC, Canada: American College of Gastroenterology.

Disclosures:

Jana Hashash: Iterative Health – Grant/Research Support.

Francis Farraye: AbbVie – Advisory Committee/Board Member. Avalo Therapeutics – Advisory Committee/Board Member. BMS – Advisory Committee/Board Member. Braintree Labs – Advisory Committee/Board Member. Fresenius Kabi – Advisory Committee/Board Member. GI Reviewers – Independent Contractor. GSK – Advisory Committee/Board Member. IBD Educational Group – Independent Contractor. Iterative Health – Advisory Committee/Board Member. Janssen – Advisory Committee/Board Member. Pfizer – Advisory Committee/Board Member. Pharmacosmos – Advisory Committee/Board Member. Sandoz Immunology – Advisory Committee/Board Member. Sebela – Advisory Committee/Board Member. Viatris – Advisory Committee/Board Member.

Yeli Wang: Iterative Health – Employee, Stock Options.

Daniel Colucci: Iterative Health – Employee, Stock Options.

Shrujal Baxi: Iterative Health – Employee, Stock Options.

Sadaf Muneer: Iterative Health – Employee, Stock Options.

Faye Yu Ci Ng: Iterative Health – Employee.

Pratik Shingru: Iterative Health – Employee, Stock Options.

Gil Melmed: AbbVie – Consultant. Amgen – Consultant. Arena – Consultant. BI – Consultant. BMS – Consultant. Dieta – Consultant, Owner/Ownership Interest. Eli Lilly – Employee. Ferring – Consultant. Fresenius Kabi – Consultant. Genentech – Consultant. Gilead – Consultant. Janssen – Consultant. Oshi – Consultant, Owner/Ownership Interest. Pfizer – Consultant, Grant/Research Support. Prometheus Labs – Consultant. Samsung Bioepis – Consultant. Takeda – Consultant. Techlab – Consultant. Viatris – Consultant.
